Putting the Kappa Statistic to Use - Nichols - 2010 - The Quality Assurance Journal - Wiley Online Library

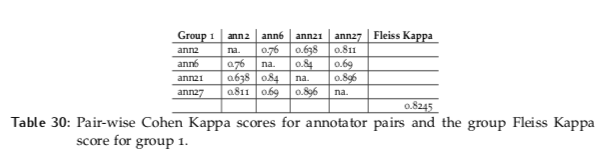

Inter-Annotator Agreement (IAA). Pair-wise Cohen kappa and group Fleiss'… | by Louis de Bruijn | Towards Data Science

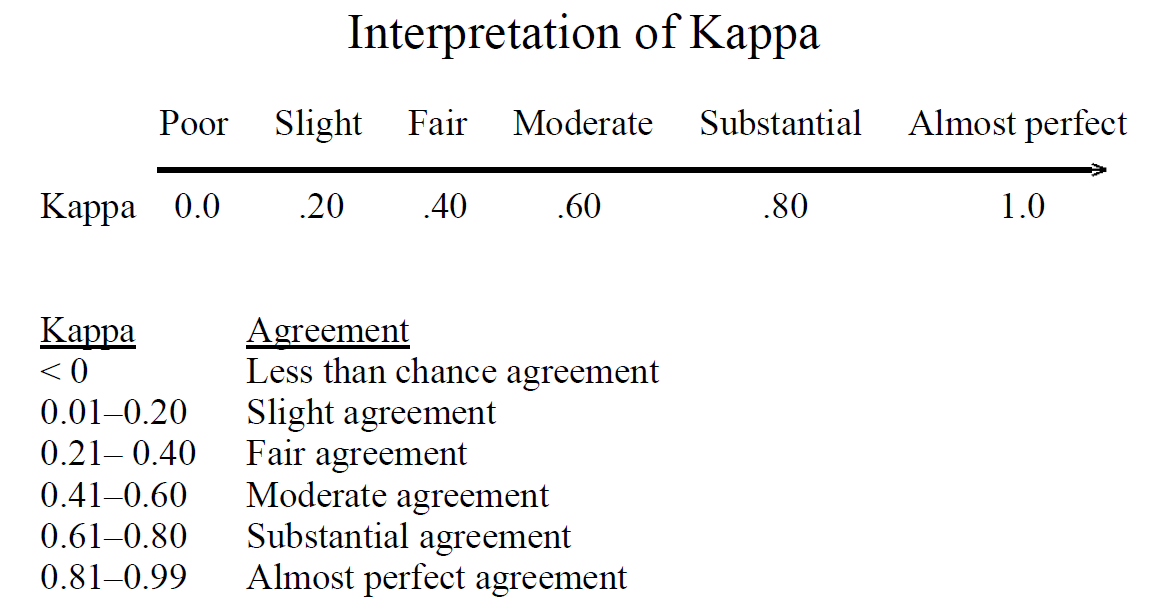
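The Louis de Bruijn article contrasts pair-wise Cohen's kappa with group-level Fleiss' kappa. The Python below is a minimal sketch of the standard formulas, with chance-corrected agreement κ = (p_o − p_e) / (1 − p_e). It is not the article's code. The function names `cohen_kappa`, `mean_pairwise_cohen`, `to_counts` and `fleiss_kappa` are hypothetical. The Fleiss version assumes every item is rated by the same number of raters.

```python
from collections import Counter
from itertools import combinations


def cohen_kappa(a, b):
    """Cohen's kappa for two annotators' label lists of equal length."""
    if len(a) != len(b) or not a:
        raise ValueError("need two non-empty label lists of equal length")
    n = len(a)
    # Observed agreement: share of items both annotators labelled identically.
    p_o = sum(x == y for x, y in zip(a, b)) / n
    # Chance agreement from each annotator's own label frequencies.
    ca, cb = Counter(a), Counter(b)
    p_e = sum(ca[k] * cb[k] for k in ca) / (n * n)
    if p_e == 1:
        return float("nan")  # undefined: both used one identical label throughout
    return (p_o - p_e) / (1 - p_e)


def mean_pairwise_cohen(annotations):
    """Average Cohen's kappa over every pair of annotators (hypothetical helper)."""
    pairs = list(combinations(annotations, 2))
    return sum(cohen_kappa(a, b) for a, b in pairs) / len(pairs)


def to_counts(annotations, categories):
    """Build counts[i][j] = number of annotators who put item i in category j."""
    n_items = len(annotations[0])
    return [[sum(ann[i] == c for ann in annotations) for c in categories]
            for i in range(n_items)]


def fleiss_kappa(counts):
    """Fleiss' kappa from an items-by-categories count matrix."""
    N = len(counts)
    n = sum(counts[0])  # raters per item, assumed constant
    if n < 2 or any(sum(row) != n for row in counts):
        raise ValueError("each item needs the same number (>= 2) of raters")
    k = len(counts[0])
    # Proportion of all assignments that fall in each category.
    p_j = [sum(row[j] for row in counts) / (N * n) for j in range(k)]
    # Per-item agreement: fraction of rater pairs that agree on item i.
    P_i = [(sum(c * c for c in row) - n) / (n * (n - 1)) for row in counts]
    P_bar = sum(P_i) / N
    P_e = sum(p * p for p in p_j)
    return (P_bar - P_e) / (1 - P_e)


a = [1, 1, 0, 0]
b = [1, 0, 0, 0]
print(cohen_kappa(a, b))                        # 0.5
print(fleiss_kappa(to_counts([a, b], [0, 1])))  # about 0.4667
```

With only two raters, Fleiss' kappa pools both raters' label frequencies into a single chance model, so it generally differs from Cohen's kappa on the same data, as the two printed values show.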

Interpretation of Kappa Values. The kappa statistic is frequently used… | by Yingting Sherry Chen | Towards Data Science

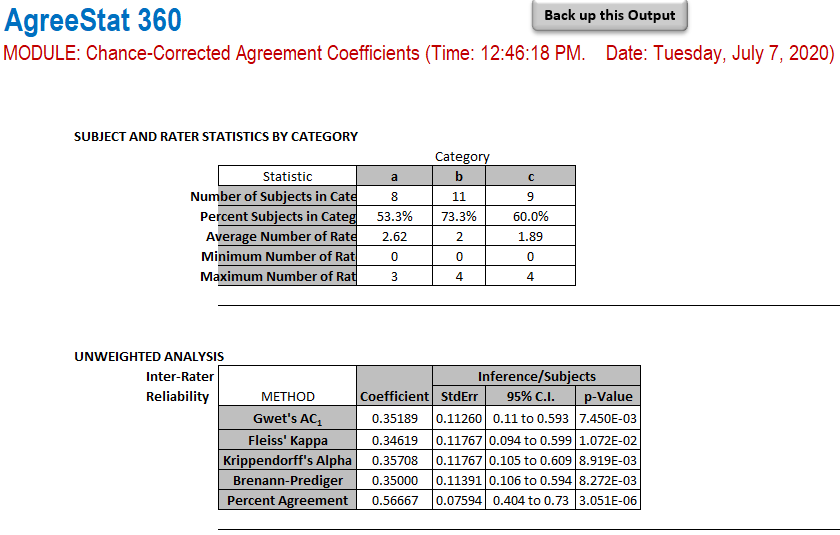

Interrater agreement statistics with skewed data: evaluation of alternatives to Cohen's kappa. | Semantic Scholar

Inter-rater agreements Category Percent Cohen's Kappa agreement (a)... | Download Scientific Diagram

Inter-Annotator Agreement (IAA). Pair-wise Cohen kappa and group Fleiss'… | by Louis de Bruijn | Towards Data Science

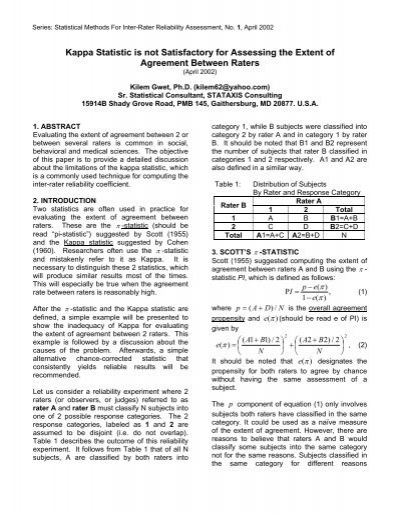

![[PDF] Interrater reliability: the kappa statistic | Semantic Scholar](https://d3i71xaburhd42.cloudfront.net/bf3a7271860b1667e3ceb84e5bc400d2635ff8b7/3-Table2-1.png)